IP blocks and rate limits can kill a scraping project fast. Free proxy lists often die within hours, and hand-written rotation code quickly turns into a mess. A Python web scraper that stays undetected needs rotating proxies that behave like real users.

Rotating residential proxies solve this by switching IPs automatically, which helps you get past anti-bot systems without writing rotation code yourself.

We’ll show you how to set up proxy rotation using Decodo, turning your scraper into an unstoppable data collection machine.

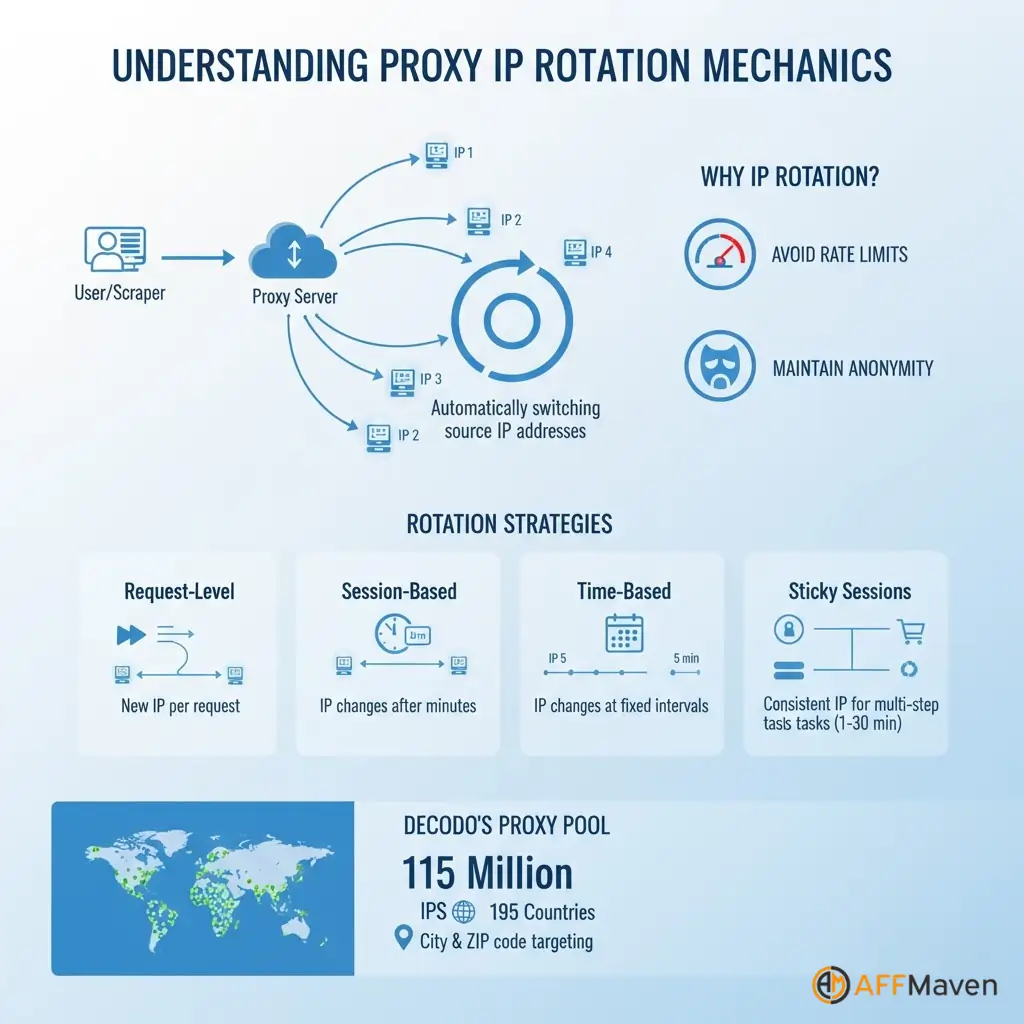

Understanding Proxy Rotation Mechanics

IP rotation means automatically switching the source IP address for each request or after set intervals. This technique distributes your scraping load across multiple addresses, preventing any single IP from triggering rate limits.
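To make the two modes concrete, here is a minimal sketch that cycles through a small list of proxy addresses, either switching on every request or keeping each IP for a fixed number of requests (one way to define an "interval"). The `RoundRobinRotator` class and the addresses are hypothetical placeholders for illustration; a managed service like Decodo does this selection on its side.

```python
from itertools import cycle


class RoundRobinRotator:
    """Hand out proxies in round-robin order (illustrative sketch).

    requests_per_ip=1 rotates on every request; a larger value keeps
    each IP for that many requests before moving to the next one.
    """

    def __init__(self, proxies, requests_per_ip=1):
        if not proxies:
            raise ValueError("need at least one proxy")
        if requests_per_ip < 1:
            raise ValueError("requests_per_ip must be >= 1")
        self._pool = cycle(proxies)
        self._requests_per_ip = requests_per_ip
        self._current = next(self._pool)
        self._used = 0

    def next_proxy(self):
        # Move to the next IP once the current one has served its quota
        if self._used == self._requests_per_ip:
            self._current = next(self._pool)
            self._used = 0
        self._used += 1
        return self._current


# Hypothetical placeholder addresses from the documentation IP range
pool = ["http://203.0.113.10:8080", "http://203.0.113.11:8080", "http://203.0.113.12:8080"]

per_request = RoundRobinRotator(pool)                  # new IP every request
per_batch = RoundRobinRotator(pool, requests_per_ip=2)  # new IP every 2 requests

print([per_request.next_proxy() for _ in range(4)])
print([per_batch.next_proxy() for _ in range(4)])
```

Per-request rotation spreads load across the most addresses; batching trades some of that diversity for fewer IP changes mid-task.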

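Different rotation strategies serve different needs:

- Per-request rotation assigns a new IP to every request, giving you the widest possible spread of addresses.
- Sticky sessions keep one IP address active temporarily for multi-step processes like logging into accounts or completing transactions.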

Decodo supports sticky sessions lasting from one minute up to 30 minutes, giving you flexibility for complex workflows.
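On the client side, a sticky session can be as simple as reusing one session ID until it reaches a chosen age. The sketch below assumes the `-session-<id>` username suffix used in this article's examples; the `StickySession` class and the 10-minute default (picked from inside the 1 to 30 minute range) are hypothetical. Decodo decides how long an IP actually stays sticky; this timer only decides when you ask for a new session.

```python
import random
import time


class StickySession:
    """Reuse one session ID until it is older than `duration` seconds (sketch).

    The `-session-<id>` username suffix mirrors this article's examples;
    confirm the exact format for your plan in the Decodo dashboard.
    """

    def __init__(self, username, password, host, port,
                 duration=10 * 60, clock=time.monotonic):
        self.username = username
        self.password = password
        self.host = host
        self.port = port
        self.duration = duration
        self._clock = clock  # injectable so the timing logic can be tested
        self._session_id = None
        self._started = 0.0

    def _renew(self):
        self._session_id = random.randint(10_000, 99_999)
        self._started = self._clock()

    def proxy_url(self):
        # Start a new session once the current one has been in use
        # for `duration` seconds
        if self._session_id is None or self._clock() - self._started >= self.duration:
            self._renew()
        return (f"http://{self.username}-session-{self._session_id}:"
                f"{self.password}@{self.host}:{self.port}")


# Usage: the same proxy URL is returned for the next five minutes
sticky = StickySession('your_username', 'your_password', 'gate.decodo.com', 7000,
                       duration=5 * 60)
proxy_url = sticky.proxy_url()
```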

A proxy pool gives you access to millions of residential IPs from real devices worldwide. Decodo maintains a pool of 115 million IPs spanning 195 countries, with granular targeting down to the city and ZIP code level.

Why Manual Rotation Creates Problems

Building rotation logic manually sounds straightforward but creates maintenance nightmares. You need to source proxy lists constantly since free proxies die quickly. Testing each proxy before use adds latency to every request.

Free proxy lists present serious issues:

Manual rotation demands custom code for selecting proxies, detecting failures, removing dead IPs, and retrying requests. This infrastructure work pulls focus away from actual data extraction tasks.
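To show how much bookkeeping that involves, here is a minimal sketch of a hand-rolled pool that selects proxies, counts failures, retires dead IPs, and retries. `ManualProxyPool`, `fetch_with_pool`, and the failure threshold are hypothetical names and values; `fetch` stands for any function you supply (for example one wrapping `requests.get`) that raises on failure.

```python
import random


class ManualProxyPool:
    """The bookkeeping that hand-rolled rotation needs (illustrative sketch)."""

    def __init__(self, proxies, max_failures=2):
        self.failures = {proxy: 0 for proxy in proxies}
        self.max_failures = max_failures

    def pick(self):
        # Select a random proxy that has not been retired yet
        if not self.failures:
            raise RuntimeError("proxy pool exhausted")
        return random.choice(list(self.failures))

    def report_failure(self, proxy):
        # Count the failure and drop the proxy once it crosses the threshold
        self.failures[proxy] += 1
        if self.failures[proxy] >= self.max_failures:
            del self.failures[proxy]

    def report_success(self, proxy):
        self.failures[proxy] = 0


def fetch_with_pool(pool, url, fetch, attempts=3):
    """Try up to `attempts` proxies; `fetch(url, proxy)` must raise on failure."""
    last_error = None
    for _ in range(attempts):
        proxy = pool.pick()
        try:
            result = fetch(url, proxy)
        except Exception as exc:  # in real code, catch your HTTP client's error types
            last_error = exc
            pool.report_failure(proxy)
            continue
        pool.report_success(proxy)
        return result
    raise RuntimeError(f"all {attempts} attempts failed") from last_error
```

Even this sketch leaves out sourcing fresh proxies, pre-testing them, and sharing state across threads, which is the maintenance burden described above.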

Smart Rotation with Decodo Residential Proxies

Decodo simplifies this with a managed pool of 115 million residential IPs across 195 locations, maintained automatically.

The service provides automatic rotation built into the proxy endpoint, health monitoring that removes dead IPs, and geographic targeting for country, city, or ZIP code level precision.

| Feature | Manual Rotation | Decodo Solution |
|---|---|---|
| IP Pool Size | Limited, unstable | 115M+ residential IPs |
| Maintenance | Manual updates required | Automatic health monitoring |
| Success Rate | Variable, often low | 99.86% success rate |
| Geo-Targeting | Not available | Country, city, ZIP, ASN |
| Setup Time | Hours of configuration | Minutes with API |

Sign up for a Decodo account and go to the proxy dashboard. Copy your username, password, endpoint, and port from the credentials section.
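If you would rather not hardcode these credentials in your script, a minimal sketch like this one reads them from environment variables. The variable names `DECODO_USERNAME` and `DECODO_PASSWORD` and the `build_proxy_url` helper are choices made for this example, and the `-session-<id>` suffix follows the format used in this article. The credentials are percent-encoded because characters such as `@` or `:` in a password would otherwise break the proxy URL; requests decodes them again before authenticating.

```python
import os
from urllib.parse import quote


def build_proxy_url(host="gate.decodo.com", port=7000, session_id=None):
    """Build a proxy URL from environment variables (sketch).

    DECODO_USERNAME / DECODO_PASSWORD are variable names chosen for this
    example; the `-session-<id>` suffix follows this article's examples.
    """
    username = os.environ["DECODO_USERNAME"]
    password = os.environ["DECODO_PASSWORD"]
    if session_id is not None:
        username = f"{username}-session-{session_id}"
    # Percent-encode credentials so characters like '@' or ':' don't break the URL
    user = quote(username, safe="")
    pwd = quote(password, safe="")
    return f"http://{user}:{pwd}@{host}:{port}"
```

Export the two variables in your shell, then pass the result as `proxies={'http': url, 'https': url}`.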

Install the required libraries:

```bash
pip install requests beautifulsoup4 lxml
```

```python
import random

import requests
from bs4 import BeautifulSoup

# Decodo rotating residential proxy configuration
DECODO_HOST = 'gate.decodo.com'
DECODO_PORT = 7000
DECODO_USERNAME = 'your_username'
DECODO_PASSWORD = 'your_password'

HEADERS = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36'
}


def get_proxies():
    """Build a proxy config with a fresh session ID, so each call gets a new IP."""
    session_id = random.randint(1000, 9999)
    proxy_url = f'http://{DECODO_USERNAME}-session-{session_id}:{DECODO_PASSWORD}@{DECODO_HOST}:{DECODO_PORT}'
    return {'http': proxy_url, 'https': proxy_url}


def scrape_with_rotating_proxy(url):
    """Scrape URL using Decodo rotating residential proxy"""
    try:
        response = requests.get(url, proxies=get_proxies(), headers=HEADERS, timeout=30)
        response.raise_for_status()
        return BeautifulSoup(response.content, 'lxml')
    except requests.exceptions.RequestException as e:
        print(f"Error scraping {url}: {e}")
        return None


# Scrape multiple URLs; every call uses a new session ID, hence a new IP
urls_to_scrape = [
    'https://example.com/page1',
    'https://example.com/page2',
    'https://example.com/page3',
]

for url in urls_to_scrape:
    soup = scrape_with_rotating_proxy(url)
    if soup is not None:
        # Process extracted data, e.g. read the page title
        print(soup.title.string if soup.title else url)
```

Decodo handles IP rotation automatically with each new session ID, so you don't need to write rotation logic yourself.

Geographic targeting routes requests through specific countries or cities:

```python
# Targeting parameters are shown as in this guide; verify the exact
# syntax for your plan in the Decodo dashboard

# Route through US residential IPs only
proxy_url = f'http://{DECODO_USERNAME}-country-us:{DECODO_PASSWORD}@{DECODO_HOST}:{DECODO_PORT}'

# City-level targeting
proxy_url = f'http://{DECODO_USERNAME}-city-newyork:{DECODO_PASSWORD}@{DECODO_HOST}:{DECODO_PORT}'
```

Session management for sticky sessions maintains the same IP across multiple requests:

```python
# Reuse this proxy_url for 10 to 20 requests before generating a new session
session_id = random.randint(1000, 9999)
proxy_url = f'http://{DECODO_USERNAME}-session-{session_id}:{DECODO_PASSWORD}@{DECODO_HOST}:{DECODO_PORT}'
```

Request-level rotation generates maximum IP diversity:

def get_fresh_proxy():

session_id = random.randint(1000, 99999)

return f'http://{DECODO_USERNAME}-session-{session_id}:{DECODO_PASSWORD}@{DECODO_HOST}:{DECODO_PORT}'import time

from requests.adapters import HTTPAdapter

from urllib3.util.retry import Retry

def create_session_with_retries():

"""Create requests session with automatic retries"""

session = requests.Session()

retry_strategy = Retry(

total=3,

backoff_factor=1,

status_forcelist=[429, 500, 502, 503, 504]

)

adapter = HTTPAdapter(max_retries=retry_strategy)

session.mount("http://", adapter)

session.mount("https://", adapter)

return session

def robust_scrape(url, max_retries=3):

"""Scrape with automatic proxy rotation and error handling"""

session = create_session_with_retries()

for attempt in range(max_retries):

session_id = random.randint(1000, 99999)

proxy_url = f'http://{DECODO_USERNAME}-session-{session_id}:{DECODO_PASSWORD}@{DECODO_HOST}:{DECODO_PORT}'

proxies = {'http': proxy_url, 'https': proxy_url}

try:

response = session.get(url, proxies=proxies, timeout=30)

if response.status_code == 200:

return BeautifulSoup(response.content, 'lxml')

else:

print(f"Status {response.status_code} on attempt {attempt + 1}")

time.sleep(2 ** attempt)

except Exception as e:

print(f"Attempt {attempt + 1} failed: {e}")

time.sleep(2 ** attempt)

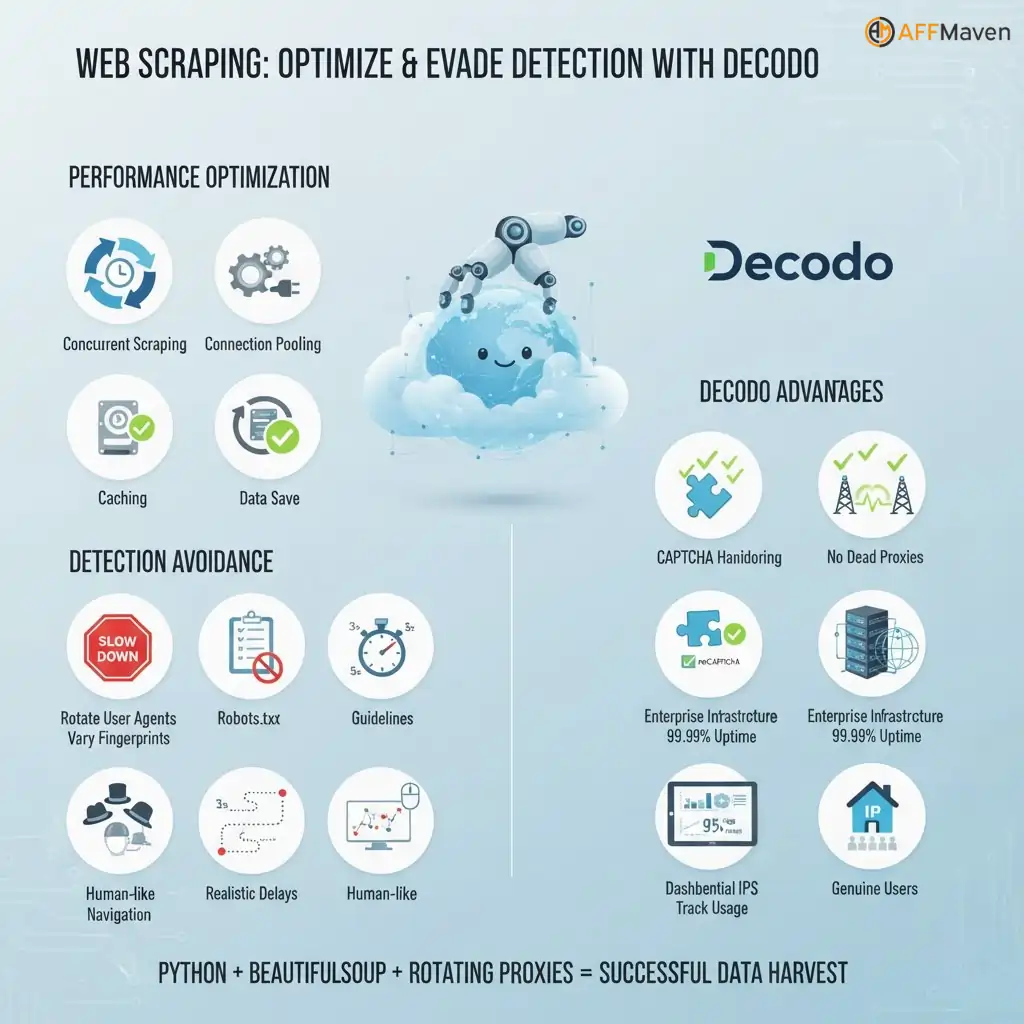

return NoneOptimization and Best Practices

Performance optimization techniques include concurrent scraping with threading for speed, connection pooling to reuse TCP connections, caching successful proxy sessions, and respectful rate limiting.
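As a rough illustration of concurrent scraping with respectful rate limiting, the sketch below runs a fetch function on a thread pool while a shared limiter spaces out request start times. `RateLimiter`, `scrape_concurrently`, and the 0.5-second default interval are hypothetical choices, not Decodo recommendations. In a real scraper, `fetch` could wrap `robust_scrape`; because requests does not guarantee that a `Session` is thread-safe, giving each thread its own session (for example via `threading.local`) is the cautious way to get connection pooling.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class RateLimiter:
    """Allow at most one request start every `min_interval` seconds across threads."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self._lock = threading.Lock()
        self._next_allowed = time.monotonic()

    def wait(self):
        # Reserve the next free time slot under the lock
        with self._lock:
            now = time.monotonic()
            start = max(now, self._next_allowed)
            self._next_allowed = start + self.min_interval
        # Sleep outside the lock so other threads can reserve their own slots
        delay = start - time.monotonic()
        if delay > 0:
            time.sleep(delay)


def scrape_concurrently(urls, fetch, max_workers=5, min_interval=0.5):
    """Run `fetch(url)` on a thread pool while respecting a global rate limit."""
    limiter = RateLimiter(min_interval)

    def worker(url):
        limiter.wait()
        return fetch(url)

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in the same order as `urls`
        return list(pool.map(worker, urls))
```

Threads help here because scraping is mostly waiting on the network; the limiter keeps the total request rate polite no matter how many workers you add.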

Avoiding detection beyond proxies:

Decodo-specific advantages:

Web scraping with Python using BeautifulSoup and rotating proxies lets you build production-ready scrapers that avoid most blocks, run into fewer CAPTCHAs, and reach geo-restricted content.

The Decodo proxy service provides residential IPs that appear as genuine users, ensuring high success rates for large-scale data collection projects.

Making Your Scraper Bulletproof

You now have a production web scraper backed by 115 million IPs across 195 locations. Your code rotates addresses automatically and retries failed requests, and Decodo advertises a 99.86% success rate for its network, so the scraper can run without manual proxy management.

The proxy rotation strategy you built sharply reduces blocks and helps keep your data collection running 24/7. Start with the free trial to test your setup, then scale to thousands of requests per hour.

What website will you scrape first with your new unblockable scraper?

Affiliate Disclosure: This post may contain some affiliate links, which means we may receive a commission if you purchase something that we recommend at no additional cost for you (none whatsoever!)