If you’re serious about ranking higher in 2026, you can’t afford to ignore SERP scrapers. These tools are now the secret weapon for top affiliates, SEO pros, and media buyers who want to track live search data, spot keyword trends, and reverse-engineer what’s actually working for their competitors.

Over three-quarters of digital marketers are already using SERP scrapers for keyword tracking and to keep tabs on rival ad placements. But here’s the kicker: not all SERP scrapers are created equal, and using the wrong one (or using it the wrong way) can land you in hot water with IP blocks or even legal headaches.

In this guide, I’ll break down the smartest ways to use SERP scrapers in 2026, which tools give you the cleanest data, and how to dodge the common pitfalls—so you can stay ahead of the SEO game and keep your campaigns firing on all cylinders.

What Are SERP Scrapers?

SERP (Search Engine Results Page) scrapers are automated tools, often referred to as bots or spiders, designed to extract data from the pages search engines display in response to a query. These tools work by parsing the HTML code of the search results pages.

They can collect various types of information, including organic search rankings, paid ad details, page titles, URLs, meta descriptions, and other SERP features like “People Also Ask” boxes or featured snippets.

Essentially, a SERP scraper automates the process of gathering data from search engine results, which would otherwise require extensive manual effort, allowing for data collection at a much larger scale and speed.
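To make the parsing step concrete, here is a minimal sketch using only Python's standard library. The markup in `SAMPLE_HTML` is a hypothetical, heavily simplified stand-in; real search result pages are far more complex and change often, so treat the tag pattern as an assumption rather than how any search engine structures its HTML.

```python
from html.parser import HTMLParser

# Hypothetical, simplified markup used only for illustration.
SAMPLE_HTML = """
<div class="result"><a href="https://example.com/a"><h3>First result</h3></a></div>
<div class="result"><a href="https://example.org/b"><h3>Second result</h3></a></div>
"""


class ResultParser(HTMLParser):
    """Collects title/URL pairs from <a href="..."><h3>title</h3></a> patterns."""

    def __init__(self):
        super().__init__()
        self.results = []
        self._href = None    # href of the <a> tag we are currently inside
        self._in_h3 = False  # True while reading a title
        self._title = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
        elif tag == "h3" and self._href:
            self._in_h3 = True
            self._title = []

    def handle_data(self, data):
        if self._in_h3:
            self._title.append(data)

    def handle_endtag(self, tag):
        if tag == "h3" and self._in_h3:
            self.results.append({
                "position": len(self.results) + 1,
                "title": "".join(self._title).strip(),
                "url": self._href,
            })
            self._in_h3 = False
        elif tag == "a":
            self._href = None


def parse_results(html):
    """Return a list of dicts with position, title, and url."""
    parser = ResultParser()
    parser.feed(html)
    return parser.results
```

Calling `parse_results(SAMPLE_HTML)` yields one dictionary per result, in page order, which is the kind of structured record that commercial SERP APIs return for you.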

Why SERP Scrapers Matter in 2026

In 2026, SERP scrapers are crucial because they provide access to real-time, accurate data from search engines, which is vital for informed decision-making in the dynamic digital industry.

This data allows businesses and marketers to conduct effective SEO analysis, track keyword rankings, monitor competitors’ search performance and strategies, and identify market trends.

Manual collection of this data is often inefficient and nearly impossible at scale, especially with evolving search engine interfaces.

SERP scrapers enable organizations to optimize their online presence, refine content marketing efforts, enhance advertising campaigns, and gain insights into local search results by systematically collecting and analyzing search data.

SERP Scrapers That Deliver Real-Time SEO Insights

1. Oxylabs SERP Scraper API

Oxylabs SERP Scraper API, integrated within its Web Scraper API platform, provides reliable, real-time search engine data from 195 countries. It employs AI-driven proxy management and advanced anti-block technologies to efficiently scrape Google, Bing, Baidu, and Yandex, making it ideal for large-scale SEO and market analysis.

Key Features:

| Technical Specs | Details |
|---|---|
| Avg. Response | <10 seconds |
| Geo-Coverage | 195 countries |
| Engines | Google/Bing/Yandex |
| Output | JSON/HTML/CSV |

Pros:

Cons:

Pricing: Oxylabs’ Web Scraper API plans, which include SERP scraping, start from $49/month, billing only for successfully retrieved results.

Why We Chose Oxylabs: Its robust infrastructure, AI assistance, and comprehensive features ensure reliable, scalable SERP data collection for demanding projects.

How to Use Oxylabs SERP Scraper API: Register on Oxylabs, create API user credentials, then send POST requests to the https://realtime.oxylabs.io/v1/queries endpoint with your desired source (e.g., google_search), query, and geo-location parameters.
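As a rough sketch of that request, the snippet below builds the POST with Python's standard library. The endpoint and the `google_search` source come from the description above; the `geo_location` field name and the use of HTTP Basic authentication are assumptions here, so check them against the Oxylabs documentation before relying on them.

```python
import base64
import json
import urllib.request

OXYLABS_ENDPOINT = "https://realtime.oxylabs.io/v1/queries"  # from the text above


def build_oxylabs_request(username, password, query, geo_location,
                          source="google_search"):
    """Build (but do not send) a POST request for one search query."""
    # Field names other than "source" and "query" are assumptions.
    payload = {"source": source, "query": query, "geo_location": geo_location}
    # Assumption: the API user credentials are sent via HTTP Basic auth.
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        OXYLABS_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


def fetch_serp(request, timeout=60):
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

A typical call would be `fetch_serp(build_oxylabs_request("USER", "PASS", "best running shoes", "United States"))`, with your own API user credentials in place of the placeholders.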

2. Decodo SERP Scraping API

Decodo’s SERP Scraping API delivers real-time search engine data, automating proxy management, CAPTCHA solving, and IP ban avoidance. Access structured JSON or HTML results from 195+ locations globally, perfect for SEO professionals and data analysts requiring reliable SERP intelligence.

Key Features:

| Technical Specs | Details |
|---|---|
| Success Rate | 100% |
| Avg. Response | ~5-6 sec |
| Locations | 195+ Global |
| Output Formats | JSON/HTML/Table |

Pros:

Cons:

Pricing: Decodo’s SERP Scraping API pricing starts from $0.08/1K requests, as part of their Web Scraping API solution.

Why We Chose Decodo: Decodo offers a cost-effective and reliable SERP API with excellent geo-targeting and a high success rate for diverse projects.

How to Use Decodo SERP Scraping API: Register on Decodo, get your API authentication credentials, and send POST requests to the https://scrape.decodo.com/v1/tasks endpoint, specifying parameters like target, query, locale, and geo.
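If you track many keywords, it can help to prepare one task payload per keyword up front. The sketch below does that with the `target`, `query`, `locale`, and `geo` parameters named above. The `google_search` target value, the example locale format, and HTTP Basic authentication are assumptions for illustration, not confirmed details of Decodo's API.

```python
import base64
import json
import urllib.request

DECODO_ENDPOINT = "https://scrape.decodo.com/v1/tasks"  # from the text above


def build_task_payloads(keywords, geo, locale, target="google_search"):
    """Return one payload dict per keyword, sharing the same geo and locale."""
    # The default target value is an assumption; use the one Decodo documents.
    return [
        {"target": target, "query": keyword, "locale": locale, "geo": geo}
        for keyword in keywords
    ]


def build_task_request(payload, username, password):
    """Build (but do not send) a POST request for a single payload."""
    # Assumption: credentials are passed via HTTP Basic auth.
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        DECODO_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )
```

Each prepared request can then be sent with `urllib.request.urlopen(request)`, ideally with a pause between calls so you stay within your plan's rate limits.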

3. ZenRows

ZenRows excels as an all-in-one SERP scraping tool, effortlessly bypassing anti-bot measures to deliver structured data from search engines like Google. It automates complex tasks, providing reliable search results for SEO analysis and market intelligence, ensuring your data pipeline remains uninterrupted.

Key Features:

| Technical Specs | Details |
|---|---|
| Avg. Success Rate | ~99.9% |
| Geo-Locations | 190+ Countries |
| Data Output | JSON/HTML |
| JS Rendering | Supported |

Pros:

- High anti-bot bypass rate.

- Reliable structured SERP data.

- Extensive geo-targeting options.

- Simple API integration.

Cons:

- Free trial has limitations.

- Can be pricier for beginners.

Pricing: Plans start at $69/month, with various tiers available; a free trial offers up to 1,000 requests.

Why We Chose ZenRows: Its robust AI anti-blocking, high success rates, and dependable structured SERP data delivery make it a top choice.

How to Use ZenRows:

4. Zyte API

Zyte API provides a comprehensive solution for web and SERP data extraction, automatically handling bans and rendering JavaScript. Its AI-powered parsing delivers structured data from complex sites, ideal for businesses needing reliable, scalable search engine insights and web data without manual proxy management.

Key Features:

| Technical Specs | Details |
|---|---|
| Ban Management | Automated |
| JS Rendering | Built-in |
| Data Output | Structured (AI) |
| Geo-Targeting | Yes (Country) |

Pros:

Cons:

Pricing: Zyte API uses a pay-as-you-go model; costs vary based on request complexity and volume.

Why We Chose Zyte API: Its all-in-one capability for unblocking, rendering, and AI-powered extraction simplifies complex SERP data gathering effectively.

How to Use Zyte API: Sign up for an API key, then send POST requests to the /extract endpoint with your target URL and desired parameters, receiving structured data.
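Here is a hedged sketch of such a request. The text above only names the `/extract` path, so the full host used below, the `browserHtml` and `geolocation` fields, and sending the API key as the Basic-auth username with an empty password are assumptions to verify against Zyte's API reference.

```python
import base64
import json
import urllib.request
from urllib.parse import urlencode

# Assumed full URL; the article itself only mentions the /extract path.
ZYTE_ENDPOINT = "https://api.zyte.com/v1/extract"


def build_zyte_request(api_key, query, country=None):
    """Build (but do not send) a POST asking for a rendered Google results page."""
    search_url = "https://www.google.com/search?" + urlencode({"q": query})
    # Assumed field: request browser-rendered HTML for the target URL.
    payload = {"url": search_url, "browserHtml": True}
    if country:
        # Assumed field name for country-level geo-targeting.
        payload["geolocation"] = country
    # Assumption: API key as Basic-auth username, empty password.
    token = base64.b64encode(f"{api_key}:".encode()).decode()
    return urllib.request.Request(
        ZYTE_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen(...)` and decoding the JSON body gives you the response to feed into your own parsing or reporting step.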

5. NodeMaven

NodeMaven provides high-quality residential and mobile proxies optimized for SERP scraping and SEO monitoring. Their advanced IP filtering ensures clean, reliable connections, minimizing blocks and CAPTCHAs while gathering accurate search engine data from 150+ countries.

Key Features:

| Technical Specs | Details |
|---|---|
| Residential IPs | 30M+ |
| Mobile IPs | 250K+ |
| Avg. Success | 99.54% |
| IP Clean Rate | 95% |

Pros:

Cons:

Pricing: NodeMaven offers a trial for €3.99 (500MB) and plans start from €30/month for 5GB of residential proxy traffic.

Why We Chose NodeMaven: Its emphasis on high-quality, filtered IPs and reliable sticky sessions makes it excellent for consistent SERP data extraction.

How to Use NodeMaven: Sign up, choose a proxy plan, and configure your scraping tools or applications using the provided proxy gate.nodemaven.com address, port, and your credentials for HTTP or SOCKS5 protocols.
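For an HTTP proxy, the configuration could look roughly like the sketch below. The host comes from the text above; the port is deliberately left as a parameter because it is not stated here, and the helper names are hypothetical. Python's built-in `urllib` does not speak SOCKS5, so that protocol would need a separate library.

```python
import urllib.request
from urllib.parse import quote

PROXY_HOST = "gate.nodemaven.com"  # from the text above


def build_proxy_url(username, password, port, scheme="http"):
    """Compose a proxy URL, percent-encoding credentials that contain symbols."""
    user = quote(username, safe="")
    secret = quote(password, safe="")
    return f"{scheme}://{user}:{secret}@{PROXY_HOST}:{port}"


def build_opener(proxy_url):
    """Return a urllib opener that routes HTTP and HTTPS traffic via the proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)
```

With the port from your NodeMaven dashboard, `build_opener(build_proxy_url("USER", "PASS", port)).open(url)` would fetch `url` through the proxy.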

How to Avoid Google Blocks with SERP Scrapers?

Search engines like Google employ various measures to detect and block automated scraping. To minimize the chances of being blocked, several strategies can be employed:

- IP Address Rotation: Regularly changing the IP address used for requests helps prevent detection, as high volumes of requests from a single IP can trigger blocks. This makes requests appear as if they are coming from different users.

- User-Agent Simulation: Scrapers can mimic legitimate web browsers by varying the user-agent strings sent with each request. This makes automated requests harder to distinguish from normal human traffic.

- Mimic Human Behavior: Introducing random delays between requests, randomizing interaction patterns (like mouse movements if using headless browsers), and limiting the request rate can make scraping activity appear more natural and less bot-like.

- Handle CAPTCHAs: Implementing mechanisms or services to solve CAPTCHAs (Completely Automated Public Turing test to tell Computers and Humans Apart) can help bypass these common security challenges, though this is a reactive measure.

- Respect Website Policies: It’s important to review and, where possible, comply with a website’s terms of service and robots.txt file, which may outline rules regarding automated access. Scraping should be done responsibly to avoid overloading servers.

- Use Headless Browsers (When Necessary): For pages with content loaded by JavaScript, headless browsers can render the page fully before data extraction, ensuring all dynamic content is captured, similar to how a user’s browser would display it.

By employing these techniques, users can increase the reliability and consistency of their SERP data collection efforts while reducing the likelihood of encountering blocks.
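A few of these strategies (IP rotation, user-agent variation, and random delays) can be sketched together in one small helper. The class and parameter names below are hypothetical, and the default delay range is an arbitrary assumption rather than a recommended setting.

```python
import itertools
import random


class RequestPlanner:
    """Plans each request: which proxy, which User-Agent, and how long to wait."""

    def __init__(self, proxies, user_agents, min_delay=2.0, max_delay=6.0, seed=None):
        if not proxies or not user_agents:
            raise ValueError("need at least one proxy and one user agent")
        self._proxies = itertools.cycle(proxies)  # round-robin IP rotation
        self._user_agents = list(user_agents)
        self._min_delay = min_delay
        self._max_delay = max_delay
        self._rng = random.Random(seed)           # seed only for reproducible tests

    def next_plan(self):
        """Return the settings to use for the next request."""
        return {
            "proxy": next(self._proxies),
            "headers": {"User-Agent": self._rng.choice(self._user_agents)},
            # Random pause so requests do not arrive at a fixed rhythm.
            "delay": self._rng.uniform(self._min_delay, self._max_delay),
        }
```

In a scraping loop you would call `time.sleep(plan["delay"])` before each request, then send it through `plan["proxy"]` with `plan["headers"]`. Round-robin rotation keeps the load spread evenly across your proxy pool.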

Picking Your Best SERP Scraper

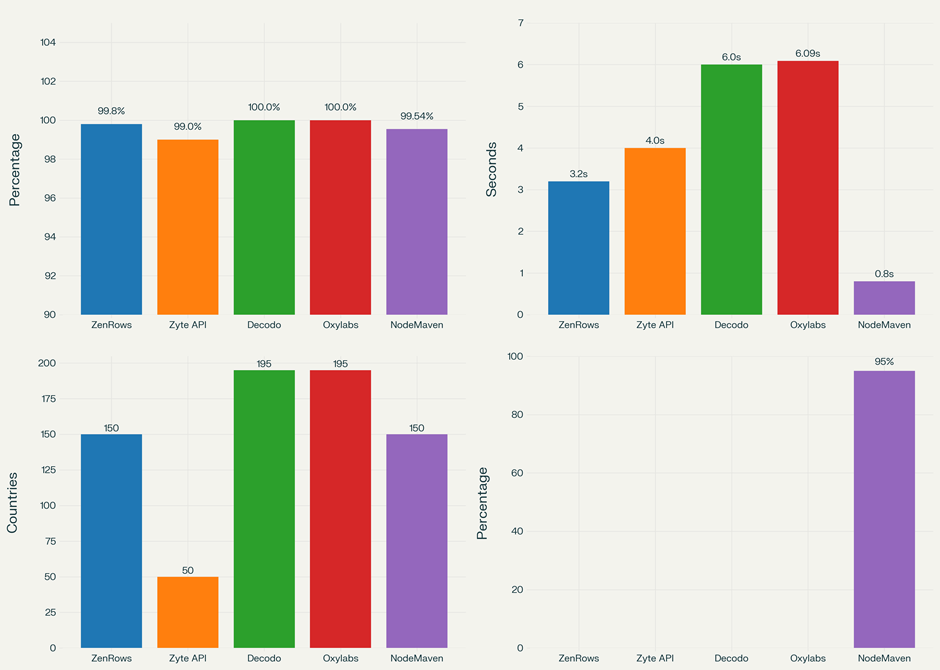

Choosing the right SERP scraper in 2026 means finding one that reliably gets you search data. Good tools offer strong anti-blocking, structured results, and cover many locations.

Look for high success rates (over 99%) and fast data delivery (0.8 to 6 seconds). Whether your tasks are big or small, today’s scrapers give great access to search data while balancing cost and features. Pick based on your project’s size and budget.

Affiliate Disclosure: This post may contain some affiliate links, which means we may receive a commission if you purchase something that we recommend, at no additional cost to you (none whatsoever!).
